
Category: AI & Data Analytics

Published Date: May 20, 2025

Discover the benefits of versioning your work in Databricks. Learn how to turn complex processes into organized, collaborative workflows that enhance the quality of your data projects. By following version control best practices, data professionals can track changes, collaborate more efficiently, avoid code conflicts, and ensure the reproducibility of their data pipelines.

In the world of data engineering, combining powerful version control tools with Databricks’ analytical capabilities can significantly boost team productivity and system reliability. Find out how to implement an effective version control system in Databricks and deliver meaningful results for your data team.

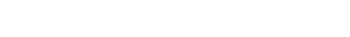

What Is Code Versioning and Why Is It Essential in Databricks?

Code versioning is a system that tracks changes to files over time, allowing you to retrieve specific versions whenever needed. In platforms like Databricks, where notebooks, scripts, and jobs are central to data workflows, versioning becomes even more critical.

Challenges of Developing Without Version Control in Databricks

Without a proper versioning system in place, teams using Databricks face issues such as:

- Accidental loss of valuable code when notebooks are overwritten

- Difficulty tracking who made specific changes and why

- Inability to roll back to previous versions when bugs are introduced

- Complex collaboration when multiple analysts and engineers are working at the same time

- Inconsistencies between development, testing, and production environments

As one data engineer recently shared: “We lost two full days of work when a production notebook was accidentally overwritten. Version control would’ve saved us that headache.”

Benefits of Proper Version Control

Implementing strong versioning practices brings several key advantages:

- Full traceability of changes (who made them, when, and why)

- Ability to quickly recover previous versions

- Parallel development without team members interfering with each other’s work

- Consistent deployment processes across environments

- Compliance with governance and audit requirements

Integrating Databricks with Git: The Foundation of Version Control

Connecting Databricks to Git systems like GitHub, GitLab, or Azure DevOps lays the groundwork for robust versioning. This integration brings the power of distributed version control to your data workflows.

Setting Up Git Integration in Databricks

To set up Git integration in Databricks, follow these steps:

- Configure Git authentication using personal access tokens (PATs) or SSH keys

- Link your Databricks workspace to the desired Git repository

- Set proper permissions for repository access

Here’s an example of how to configure it using the Databricks REST API:

```python
import requests

# Replace with your workspace URL and a Databricks API token.
databricks_instance = "https://seuworkspace.databricks.com"
api_token = "api_token"

headers = {"Authorization": f"Bearer {api_token}"}

git_config = {
    "personal_access_token": "personal_access_token",
    "git_username": "git_username",
    # Other providers include "gitLab", "azureDevOpsServices", and "bitbucketCloud".
    "git_provider": "gitHub",
}

# POST creates a new Git credential for the calling user.
# (PATCH is used on /api/2.0/git-credentials/{credential_id} to update an existing one.)
response = requests.post(
    f"{databricks_instance}/api/2.0/git-credentials",
    headers=headers,
    json=git_config,
)

print(f"status: {response.status_code}")
print(f"response: {response.json()}")
```

Common Git Workflows in Databricks

The most effective Git workflows for Databricks teams include:

Git Flow

Ideal for larger teams with regular release cycles, Git Flow defines specific branches:

- main: stable production code

- develop: integration for the next release

- feature/*: development of new features

- release/*: release preparation

- hotfix/*: urgent production fixes
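The branch roles above can be enforced with a small naming check, for example in a pre-merge hook. This is a minimal sketch: the function name `classify_branch` and the role labels it returns are illustrative assumptions, not part of Git or Databricks.

```python
import re

# Git Flow branch patterns from the list above, mapped to their role.
GIT_FLOW_PATTERNS = {
    r"main": "production",
    r"develop": "integration",
    r"feature/.+": "feature",
    r"release/.+": "release",
    r"hotfix/.+": "hotfix",
}


def classify_branch(name: str) -> str:
    """Return the Git Flow role of a branch name, or 'unknown' if it matches none."""
    for pattern, role in GIT_FLOW_PATTERNS.items():
        if re.fullmatch(pattern, name):
            return role
    return "unknown"


print(classify_branch("feature/add-silver-table"))
print(classify_branch("fix-typo"))
```

A check like this could reject branches classified as `unknown` before a pull request is opened.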

GitHub Flow

Simpler and better suited for continuous deployment:

- The main branch always contains production-ready code

- Feature branches for development

- Pull requests for code review before merging

GitLab Flow

Adds environment-specific branches to GitHub Flow:

- main → staging → production

- Feature branches are merged into main

- Controlled promotion between environments

Git Folders: Native Version Control in the Databricks Platform

Git Folders (formerly known as Databricks Repos) are a native feature that makes it easier to work with Git repositories directly within the Databricks interface, bringing version control into the platform itself.

Key Features of Git Folders

- Visual interface for common Git operations

- Sync between the Git repo and your workspace through pull and push operations

- Branch management within the Databricks environment

- Commit history and version diffs

- Support for multiple Git providers (GitHub, GitLab, Azure DevOps, Bitbucket)

How to Set Up and Use Git Folders

To get started with Git Folders in Databricks:

- Go to the Git Folders section in the Databricks sidebar

- Click Create Git Folder

- Enter the Git repository URL and your credentials

- Clone the repository into your workspace

Once configured, you can:

- Switch between existing branches or create new ones

- Commit changes directly to the repository

- View diffs between versions

- Pull updates from the remote repository

- Push local changes back to the remote repository
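The same operations can also be scripted against the Repos REST API. The sketch below only builds the requests for cloning a repository (`POST /api/2.0/repos`) and switching branches (`PATCH /api/2.0/repos/{repo_id}`) without sending them. The helper names, host, token, repository URL, and workspace path are hypothetical placeholders.

```python
import json
import urllib.request


def build_request(host, token, method, endpoint, payload):
    """Build (but do not send) an authenticated JSON request to the workspace."""
    return urllib.request.Request(
        url=f"{host}{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        method=method,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def clone_git_folder(host, token, repo_url, provider, workspace_path):
    """Request that clones a remote repository into a workspace path."""
    payload = {"url": repo_url, "provider": provider, "path": workspace_path}
    return build_request(host, token, "POST", "/api/2.0/repos", payload)


def checkout_branch(host, token, repo_id, branch):
    """Request that switches an existing Git folder to the given branch."""
    return build_request(
        host, token, "PATCH", f"/api/2.0/repos/{repo_id}", {"branch": branch}
    )


# Hypothetical values for illustration only.
req = clone_git_folder(
    "https://example-workspace.cloud.databricks.com",
    "<api_token>",
    "https://github.com/example-org/example-project.git",
    "gitHub",
    "/Repos/someone@example.com/example-project",
)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the calls easy to inspect or unit test before they touch a real workspace.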

Organizing Code with Git Folders

An effective structure for organizing data projects within Git Folders might look like this:

```
project/
|-- notebooks/
|   |-- bronze/      # ingestion and initial validation
|   |-- silver/      # intermediate transformations
|   |-- gold/        # analytics layer and modeling
|-- conf/            # environment-specific configurations
|-- jobs/            # job definitions
|-- libraries/       # shared libraries
|-- tests/           # automated tests
|-- README.md
```
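To start a new repository with this layout, the directories can be generated by a short script. This is a sketch under assumptions: `scaffold_project` is a hypothetical helper, and the `.gitkeep` placeholder files are a common convention (Git does not track empty directories), not something Databricks requires.

```python
from pathlib import Path

# Directory layout taken from the structure above.
PROJECT_DIRS = [
    "notebooks/bronze",
    "notebooks/silver",
    "notebooks/gold",
    "conf",
    "jobs",
    "libraries",
    "tests",
]


def scaffold_project(root: str) -> Path:
    """Create the project skeleton under `root` and return its path."""
    root_path = Path(root)
    for relative in PROJECT_DIRS:
        directory = root_path / relative
        directory.mkdir(parents=True, exist_ok=True)
        # Git does not track empty directories, so add a placeholder file.
        (directory / ".gitkeep").touch()
    readme = root_path / "README.md"
    if not readme.exists():
        readme.write_text(f"# {root_path.name}\n", encoding="utf-8")
    return root_path


if __name__ == "__main__":
    scaffold_project("project")
```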

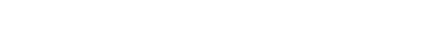

Best Practices for Versioning in Databricks

Following these practices helps keep your version control process robust and efficient.

Repository Structure and Code Organization

- Create separate repositories for distinct projects

- Organize notebooks into logical directories based on the medallion architecture (bronze/silver/gold)

- Keep configuration files separate from code

- Use README files to document the structure and purpose of each directory

Branching Strategies for Different Environments

An effective strategy includes dedicated branches for:

- Development (develop)

- Quality assurance (qa)

- Staging (staging)

- Production (main or production)

Implement quality gates between environments using pull requests with required approvals.
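One way to back such gates with automation is to check that a pull request only promotes code one environment at a time. The sketch below assumes a strict develop → qa → staging → main order. That policy, and the name `is_valid_promotion`, are illustrative assumptions that a team would adapt (for example, to allow hotfixes).

```python
# Promotion order from the list above: each environment branch may only
# receive pull requests from the branch directly before it.
PROMOTION_ORDER = ["develop", "qa", "staging", "main"]


def is_valid_promotion(source: str, target: str) -> bool:
    """Return True if `source` is the branch immediately preceding `target`."""
    if source not in PROMOTION_ORDER or target not in PROMOTION_ORDER:
        return False
    return PROMOTION_ORDER.index(target) - PROMOTION_ORDER.index(source) == 1


print(is_valid_promotion("qa", "staging"))    # True
print(is_valid_promotion("develop", "main"))  # False
```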

Code Review and Pull Requests

Your code review process should include:

- Assigned reviewers with domain expertise

- A standardized review checklist

- Automated quality checks

- Automated tests run before approval

Semantic Versioning for Libraries and Releases

Adopt semantic versioning (SemVer) for all releases:

- Major version (X.0.0): Breaking changes

- Minor version (0.X.0): Backward-compatible feature additions

- Patch version (0.0.X): Bug fixes
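The three rules above can be expressed as a small helper that computes the next version number. This is a minimal sketch that handles plain `MAJOR.MINOR.PATCH` strings only. Pre-release and build metadata suffixes are out of scope, and `bump_version` is a hypothetical name.

```python
def bump_version(version: str, change: str) -> str:
    """Return the next semantic version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":
        # Breaking change: reset minor and patch.
        return f"{major + 1}.0.0"
    if change == "minor":
        # Backward-compatible feature: reset patch.
        return f"{major}.{minor + 1}.0"
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(bump_version("1.4.2", "minor"))  # 1.5.0
```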

CI/CD Integration with Versioning

Integrate your versioning process with CI/CD pipelines:

```yaml
# Example of a CI/CD pipeline for Databricks in Azure DevOps.
# The deployment task name and its inputs depend on the Databricks
# extension installed in your Azure DevOps organization.
trigger:
  branches:
    include:
      - develop
      - main

stages:
  - stage: BuildAndTest
    jobs:
      - job: UnitTests
        steps:
          - script: pytest tests/unit
            displayName: 'Run Unit Tests'

  - stage: DeployToQA
    condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/develop'))
    jobs:
      - job: DeployNotebooks
        steps:
          - task: DatabricksDeployment@0
            inputs:
              databricksAccessToken: $(databricks_qa_token)
              workspaceUrl: 'https://qa-workspace.databricks.com'
              notebooksFolderPath: '$(Build.SourcesDirectory)/notebooks'
              notebooksTargetPath: '/Shared/project-name'

  - stage: DeployToProd
    condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/main'))
    jobs:
      - job: DeployNotebooks
        steps:
          - task: DatabricksDeployment@0
            inputs:
              databricksAccessToken: $(databricks_prod_token)
              workspaceUrl: 'https://prod-workspace.databricks.com'
              notebooksFolderPath: '$(Build.SourcesDirectory)/notebooks'
              notebooksTargetPath: '/Shared/project-name'
```
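The UnitTests job in this pipeline runs `pytest tests/unit`. A sketch of what such a test file might contain is shown below, assuming transformation logic is kept in plain Python functions so it can be tested without a cluster. The function `normalize_column_name` and its rules are hypothetical examples, not part of any Databricks library.

```python
import re


def normalize_column_name(column: str) -> str:
    """Normalize a column name such as 'Customer ID' to 'customer_id'."""
    # Collapse every run of non-alphanumeric characters into one underscore.
    cleaned = re.sub(r"[^0-9a-zA-Z]+", "_", column.strip())
    return cleaned.strip("_").lower()


def test_replaces_spaces_and_symbols():
    assert normalize_column_name("Customer ID") == "customer_id"
    assert normalize_column_name(" Order-Date ") == "order_date"


def test_keeps_clean_names():
    assert normalize_column_name("amount") == "amount"
```

In a real project the function would live in a shared library and the tests in `tests/unit`. They are combined here only to keep the example self-contained.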

Complementary Tools for Versioning in Databricks

To further enhance your version control process, consider using the following complementary tools.

Databricks Notebook Export Utility

This utility allows you to programmatically export notebooks:

```python
import os

from databricks_cli.configure.provider import ProfileConfigProvider
from databricks_cli.sdk.api_client import ApiClient
from databricks_cli.workspace.api import WorkspaceApi


def export_notebooks(source_path, target_dir, fmt="SOURCE"):
    """
    Exports all notebooks from a Databricks workspace directory to local files.

    Args:
        source_path: Path of the directory in the Databricks workspace
        target_dir: Local directory to save the notebooks
        fmt: Export format (SOURCE, HTML, JUPYTER)
    """
    config = ProfileConfigProvider("DEFAULT").get_config()
    api_client = ApiClient(host=config.host, token=config.token)
    workspace_api = WorkspaceApi(api_client)

    # Create the local target directory if it does not exist yet
    os.makedirs(target_dir, exist_ok=True)

    # List all objects in the workspace directory
    for obj in workspace_api.list_objects(source_path):
        target_path = os.path.join(target_dir, obj.basename)

        if obj.object_type == "DIRECTORY":
            # Recursively export subdirectories
            export_notebooks(obj.path, target_path, fmt)
        elif obj.object_type == "NOTEBOOK":
            # Export the notebook; the .py extension assumes Python notebooks in SOURCE format
            local_file = f"{target_path}.py"
            workspace_api.export_workspace(obj.path, local_file, fmt, is_overwrite=True)
            print(f"Exported: {obj.path} -> {local_file}")


# Example usage
export_notebooks("/Shared/Project", "./backup_notebooks")
```

dbx: CLI for Databricks Workflows

dbx is a command-line tool that simplifies:

- Deploying jobs directly from Git

- Running tests in Databricks environments

- Integrating with CI/CD pipelines

Basic installation and setup:

```bash
pip install dbx

# initialize a new project
dbx init --template jobs-minimal

# deploy a job
dbx deploy --jobs=job_name --environment=dev
```

Integrations with Package Managers

For shared Python libraries:

```bash
# create package structure
mkdir -p mypackage/mypackage
touch mypackage/setup.py mypackage/mypackage/__init__.py
```

```python
# in setup.py
from setuptools import setup, find_packages

setup(
    name="mypackage",
    version="0.1.0",
    packages=find_packages(),
    install_requires=[
        "pyspark>=3.0.0",
        "delta-spark>=1.0.0",
    ],
)
```

```bash
# build (from the directory containing setup.py) and upload to cluster
cd mypackage
python setup.py sdist bdist_wheel
databricks fs cp ./dist/mypackage-0.1.0-py3-none-any.whl dbfs:/FileStore/packages/
```

Collaborative Development with Version Control in Databricks

Proper versioning is the foundation for efficient collaborative development in Databricks.

Conflict Management and Merge

To effectively manage conflicts:

- Pull regularly to keep your code up to date

- Split large notebooks into smaller units to reduce the chance of conflicts

- Use tools like nbdime to visualize notebook-specific diffs

- Establish clear team conventions for conflict resolution
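Smaller units also make diffs easier to read. Notebooks exported in source format separate cells with a `# COMMAND ----------` line, so a cell-level comparison can be sketched with the standard library alone. The helper names and the sample notebook contents below are hypothetical, and this is not a replacement for nbdime.

```python
import difflib

CELL_SEPARATOR = "# COMMAND ----------"


def split_cells(source: str) -> list:
    """Split a notebook exported in source format into a list of cell bodies."""
    cells = [cell.strip() for cell in source.split(CELL_SEPARATOR)]
    return [cell for cell in cells if cell]


def changed_cells(old_source: str, new_source: str) -> list:
    """Return (operation, old_cells, new_cells) for every non-equal block of cells."""
    old_cells, new_cells = split_cells(old_source), split_cells(new_source)
    matcher = difflib.SequenceMatcher(a=old_cells, b=new_cells, autojunk=False)
    return [
        (tag, old_cells[i1:i2], new_cells[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Hypothetical notebook contents for illustration.
old = """# Databricks notebook source
df = spark.read.table("bronze.orders")

# COMMAND ----------

df = df.dropDuplicates()
"""
new = old.replace("dropDuplicates()", 'dropDuplicates(["order_id"])')

for tag, before, after in changed_cells(old, new):
    print(tag, before, "->", after)
```

Reporting changes per cell rather than per line shows quickly which logical steps of a notebook two branches both touched.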

Documentation Integrated with Version Control

Link your documentation to version control:

- Use docstrings in all functions and notebooks

- Keep a CHANGELOG.md file up to date

- Document architectural decisions using Architecture Decision Records (ADRs)

- Use tools like Sphinx to generate documentation from your code
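Keeping the changelog current is easier when entries follow a fixed layout. The sketch below renders one release section in a "Keep a Changelog"-style format. The function name and the sample entries are hypothetical.

```python
from datetime import date


def format_changelog_entry(version: str, released: date, changes: dict) -> str:
    """Render one release section with a heading per change type."""
    lines = [f"## [{version}] - {released.isoformat()}"]
    for section, items in changes.items():
        lines.append("")
        lines.append(f"### {section}")
        lines.extend(f"- {item}" for item in items)
    return "\n".join(lines) + "\n"


# Hypothetical release contents for illustration.
entry = format_changelog_entry(
    "0.2.0",
    date(2025, 5, 20),
    {
        "Added": ["Silver-layer deduplication notebook"],
        "Fixed": ["Null handling in bronze ingestion"],
    },
)
print(entry)
```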

Training and Versioning Culture

To build a strong versioning culture:

- Provide regular Git training sessions for the entire team

- Create quick-reference guides for common Git operations

- Assign specialized reviewers for different areas of the codebase

- Acknowledge and celebrate versioning best practices

Implementing Version Control in Existing Projects

Migrating existing projects to a version control system requires careful planning.

Migration Strategies

A gradual and safe approach includes:

- Conducting a full inventory of existing notebooks and assets

- Creating the Git repository with a well-defined structure

- Importing notebooks with minimal initial history

- Validating functionality post-migration

- Training the team on the new workflow
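For the inventory step, a workspace listing can be summarized by object type and notebook language before deciding what to migrate. This is a sketch: `summarize_inventory` is a hypothetical helper, and the sample data is invented, shaped like the `objects` array returned by a workspace listing (with `path`, `object_type`, and `language` fields).

```python
from collections import Counter


def summarize_inventory(objects: list) -> dict:
    """Count workspace objects by type and notebooks by language."""
    by_type = Counter(obj["object_type"] for obj in objects)
    by_language = Counter(
        obj.get("language", "UNKNOWN")
        for obj in objects
        if obj["object_type"] == "NOTEBOOK"
    )
    return {"by_type": dict(by_type), "notebooks_by_language": dict(by_language)}


# Hypothetical sample shaped like the "objects" array of a workspace listing.
sample = [
    {"path": "/Shared/Project/ingest", "object_type": "NOTEBOOK", "language": "PYTHON"},
    {"path": "/Shared/Project/report", "object_type": "NOTEBOOK", "language": "SQL"},
    {"path": "/Shared/Project/utils", "object_type": "DIRECTORY"},
]
print(summarize_inventory(sample))
```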

Case Study: Optimizing a Data Platform in the Financial Sector

An investment management firm transformed its data infrastructure after identifying critical limitations in its new platform. The project focused on three core pillars: performance optimization, robust governance, and reduction of operational costs.

The implemented approach included:

- A complete restructuring of Databricks to maximize existing resources

- Adoption of an agile delivery model with specialized squads

- Consolidation of tools and elimination of redundancy

This resulted in measurable outcomes such as:

- Speed: Pipeline development became 2x faster

- Reliability: Achieved over 99% system availability

- Cost Savings: Reduced operational costs by $1M annually

- Quality: Enhanced governance with end-to-end observability

This transformation highlights how targeted expertise can turn technical challenges into competitive advantages, laying a solid foundation for sustainable growth in the financial sector.

Conclusion: The Future of Version Control in Databricks

Proper code versioning is essential for data teams working with Databricks. With Git integration, Git Folders, and the best practices outlined in this article, your team can collaborate more effectively, securely, and efficiently.

Implementing a robust version control system brings immediate benefits in traceability, code quality, and team productivity. It also lays a strong foundation for adopting advanced practices like Data DevOps and MLOps.

Start versioning your code in Databricks today. Your team will thank you for the clarity, security, and efficiency it brings.

About Indicium

Indicium is a global leader in data and AI services, built to help enterprises solve what matters now and prepare for what comes next. Backed by a $40 million investment and a team of more than 400 certified professionals, we deliver end-to-end solutions across the full data lifecycle. Our proprietary AI-enabled IndiMesh framework powers every engagement with collective intelligence, proven expertise, and rigorous quality control. Industry leaders like PepsiCo and Bayer trust Indicium to turn complex data challenges into lasting results.

Robson Sampaio
